The Ethical Framework: The Human-in-the-Loop

I. The Unaddressed Risk of Strategic Autonomy

The proliferation of autonomous AI systems in strategic decision-making has introduced a profound governance gap that most organizations have yet to acknowledge, let alone address. When artificial intelligence operates without transparent oversight—particularly in domains involving pricing strategy, market positioning, resource allocation, or competitive response—it creates compounding layers of institutional risk that extend far beyond technical malfunction.

Algorithmic risk manifests in multiple forms: embedded bias that perpetuates discriminatory outcomes, model drift that silently degrades decision quality over time, and emergent behaviors that deviate from intended parameters without detection. These are not hypothetical concerns; they represent documented failure modes across financial services, healthcare, and enterprise operations where opacity met consequence.

Equally critical is strategic risk—the erosion of human fiduciary control. When executives delegate strategic authority to systems they cannot interrogate, challenge, or override with confidence, they abdicate their fundamental responsibility as organizational stewards. The result is a dangerous inversion: humans become reactive monitors of machine decisions rather than proactive governors of strategic direction.

The prevailing industry response—post-hoc audits, compliance checkboxes, and vague commitments to “responsible AI”—is architecturally insufficient. Trust cannot be assumed; it must be engineered. This requires embedding governance mechanisms at the foundational level of AI systems, not applying them as superficial layers after deployment. The question facing executive leadership is not whether AI will drive strategic advantage, but whether that advantage will be achieved through accountable, explicable, human-governed systems—or through opaque automation that concentrates risk while diffusing responsibility.

II. The Elevion Governance Principle: Human-in-the-Loop (HIL)

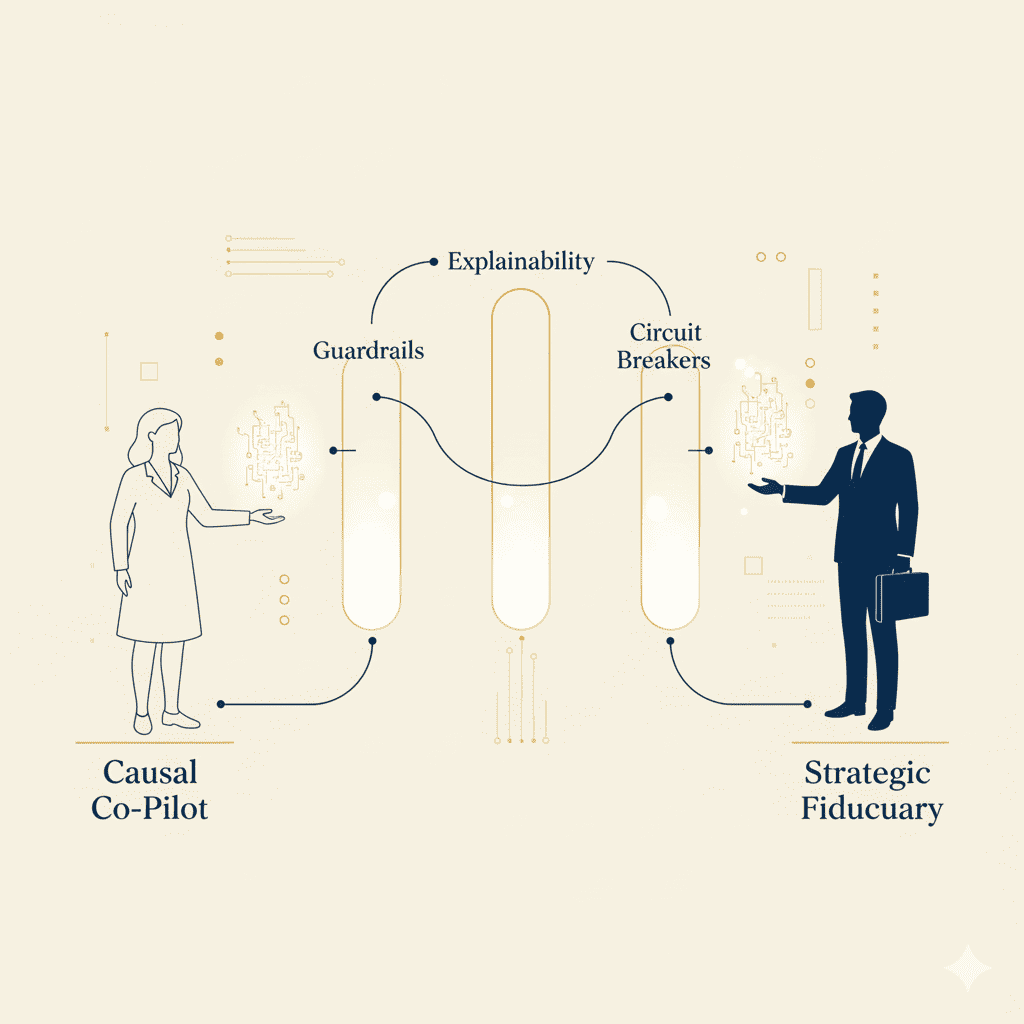

Elevion’s ethical architecture rests on a foundational principle: the AI serves as the Causal Co-Pilot, while the human executive retains the role of Strategic Fiduciary. This is not a mere philosophical distinction—it is a governance mandate encoded into every layer of our predictive infrastructure.

The Human-in-the-Loop (HIL) model establishes that no strategic recommendation becomes operational without informed human authorization. This framework is supported by Three Pillars of Trust that operationalize accountability:

- Explainability (XAI) – Transparency as Infrastructure

Every strategic recommendation generated by the Elevion platform is accompanied by causal reasoning chains that detail why the system reached its conclusion. We employ Explainable AI (XAI) methodologies, including SHAP values, causal pathway visualization, and counterfactual analysis, to surface the specific variables, market signals, and probabilistic weights driving each output. Executives receive not just predictions, but auditable logic that enables informed evaluation and challenge.

- Accountability and Guardrails – Constraints by Design

Strategic autonomy without boundaries is strategic abdication. Elevion embeds configurable guardrails that enforce organizational values, regulatory requirements, and risk tolerances directly within the decision architecture. These include parametric constraints (e.g., maximum price variance thresholds), ethical filters (e.g., prohibition of discriminatory segmentation), and alignment checks that flag recommendations inconsistent with stated corporate principles. Governance is not retrospective; it is preventive.

- Safety and Circuit Breakers – Fail-Safe Mechanisms

Trust requires the capacity for intervention. Elevion incorporates automatic deactivation triggers that halt AI-driven strategies when confidence thresholds degrade, when external conditions exceed modeled scenarios, or when anomaly detection systems identify divergence from expected patterns. Additionally, executives maintain manual override authority at all times, ensuring that human judgment supersedes computational output under conditions of uncertainty, ethical ambiguity, or strategic redirection.
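To make the attribution idea behind SHAP values more concrete, the sketch below computes exact Shapley values for a toy uplift model. It is an illustration only, not Elevion's implementation: the model, the feature names, the weights, and the zero baseline are all hypothetical, and practical SHAP tooling generally relies on approximations or model-specific algorithms rather than the brute-force enumeration shown here.

```python
from itertools import combinations
from math import factorial

# Hypothetical weights for a toy linear uplift model (illustrative only).
WEIGHTS = {"competitor_undercut": 0.5, "inventory_age": 0.25, "sentiment_drop": 0.125}


def predict(features):
    """Toy model: predicted volume uplift as a weighted sum of signals."""
    return sum(WEIGHTS[name] * value for name, value in features.items())


def shapley_values(model, instance, baseline):
    """Exact Shapley attribution.

    Each feature's value is its marginal contribution averaged over all
    coalitions of the other features; features outside a coalition are
    replaced by their baseline value.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    result = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_feature = value(set(subset) | {name})
                without_feature = value(set(subset))
                total += weight * (with_feature - without_feature)
        result[name] = total
    return result


# Hypothetical observed signals versus a "nothing happening" baseline.
instance = {"competitor_undercut": 1.0, "inventory_age": 2.0, "sentiment_drop": 2.0}
baseline = {name: 0.0 for name in instance}
attributions = shapley_values(predict, instance, baseline)

for name, contribution in attributions.items():
    print(f"{name}: {contribution:+.3f}")
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, which is the property that lets a reviewer see how much each signal contributed to a given recommendation.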

This architecture establishes a fiduciary relationship between executive and system: the AI amplifies analytical capacity and pattern recognition, but strategic authority—and accountability—remain unambiguously human.
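As a rough illustration of how parametric constraints and ethical filters of this kind might be expressed, consider the following minimal sketch. Every class, field, and function name is hypothetical, the 10% price variance cap and the list of prohibited attributes are assumptions made for the example, and the 18% minimum margin simply echoes the figure used in this document's pricing example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PricingRecommendation:
    """Hypothetical shape of a single pricing recommendation."""
    sku: str
    current_price: float   # assumed to be positive
    proposed_price: float  # assumed to be positive
    unit_cost: float
    segmentation_features: tuple = ()


@dataclass(frozen=True)
class Guardrails:
    """Configurable limits; all default values are illustrative assumptions."""
    max_price_variance: float = 0.10  # assumed cap on relative price change
    min_margin: float = 0.18          # minimum margin as a share of price
    prohibited_features: frozenset = frozenset({"age", "gender", "ethnicity"})


def check_guardrails(rec, rails):
    """Return a list of violation messages; an empty list means compliant."""
    violations = []

    # Parametric constraint: relative change versus the current price.
    variance = abs(rec.proposed_price - rec.current_price) / rec.current_price
    if variance > rails.max_price_variance:
        violations.append(f"price variance {variance:.0%} exceeds limit")

    # Parametric constraint: margin at the proposed price.
    margin = (rec.proposed_price - rec.unit_cost) / rec.proposed_price
    if margin < rails.min_margin:
        violations.append(f"margin {margin:.0%} is below the minimum")

    # Ethical filter: segmentation must not use prohibited attributes.
    banned = rails.prohibited_features.intersection(rec.segmentation_features)
    if banned:
        violations.append(f"prohibited segmentation on {sorted(banned)}")

    return violations


compliant = PricingRecommendation("SKU-127", 100.0, 92.0, 70.0, ("region",))
risky = PricingRecommendation("SKU-127", 100.0, 80.0, 70.0, ("region", "age"))

print(check_guardrails(compliant, Guardrails()))
print(check_guardrails(risky, Guardrails()))
```

Returning the full list of violations, rather than stopping at the first one, gives the reviewing executive a complete picture of why a recommendation was flagged.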

III. The Trust Architecture in Practice

The governance framework is not theoretical—it is structurally integrated into Elevion’s Predictive Engine across all strategic domains. Consider a representative use case: dynamic pricing optimization for a multi-SKU portfolio.

The AI analyzes demand elasticity, competitive positioning, inventory levels, and market sentiment to recommend pricing adjustments. Rather than auto-executing these changes, the system presents:

Causal Explanation: “Price reduction recommended based on 78% probability of volume uplift driven by competitive undercut detected in Region 3, inventory aging risk in SKU-127, and sentiment decline correlated with recent service delays.”

Guardrail Status: “Recommendation complies with anti-predatory pricing policy, maintains minimum margin threshold of 18%, and passes equity audit for demographic neutrality.”

Confidence and Circuit Breaker Metrics: “Model confidence: 82%. Scenario coverage: 91%. Auto-deactivation will trigger if competitor response deviates >15% from historical patterns or if margin erosion accelerates beyond 2% weekly.”

The executive reviews, challenges assumptions, adjusts parameters if necessary, and authorizes—or declines—the strategy. The AI informs; the human decides. This preserves agility while anchoring accountability.
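The review-then-authorize step can be sketched as a simple approval gate: execution is refused unless a named human has explicitly approved the recommendation. This is a minimal illustration under assumed names (`Recommendation`, `review`, `execute`, and `AuthorizationRequired` are all hypothetical), not a description of the platform's actual interfaces.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Decision(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    DECLINED = "declined"


class AuthorizationRequired(Exception):
    """Raised when execution is attempted without human approval."""


@dataclass
class Recommendation:
    """Hypothetical recommendation awaiting executive review."""
    summary: str
    explanation: str
    decision: Decision = Decision.PENDING
    reviewer: Optional[str] = None


def review(rec: Recommendation, reviewer: str, approve: bool) -> Recommendation:
    """Record the human decision and who made it."""
    rec.decision = Decision.APPROVED if approve else Decision.DECLINED
    rec.reviewer = reviewer
    return rec


def execute(rec: Recommendation, action: Callable[[Recommendation], str]) -> str:
    """Run the action only if a human has approved the recommendation."""
    if rec.decision is not Decision.APPROVED:
        raise AuthorizationRequired(
            f"'{rec.summary}' is {rec.decision.value}; human approval is required"
        )
    return action(rec)


def apply_price_change(rec: Recommendation) -> str:
    # Stand-in for the real execution step.
    return f"executed: {rec.summary} (approved by {rec.reviewer})"


rec = Recommendation(
    summary="Reduce price on SKU-127",
    explanation="Competitive undercut detected in Region 3",
)

try:
    execute(rec, apply_price_change)
    blocked = False
except AuthorizationRequired:
    blocked = True  # pending recommendations never reach execution

review(rec, reviewer="cfo@example.com", approve=True)
outcome = execute(rec, apply_price_change)
print(blocked, outcome)
```

Recording the reviewer alongside the decision keeps an audit trail of who authorized each strategy.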

Crucially, the Ethical Framework is not a peripheral add-on—it is a core structural component of the Predictive Engine itself. Strategic Guardrails operate as embedded constraints within the optimization algorithms. Automatic Deactivation Triggers function as circuit breakers within the execution layer. Explainability modules generate causal narratives in real time, not post-analysis. This ensures that governance operates at system speed, not audit speed.
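One way to picture an automatic deactivation trigger is as a latching circuit breaker that is evaluated on every monitoring cycle. The sketch below is an assumption-laden illustration: the class and method names are hypothetical, the 15% competitor deviation and 2% weekly margin erosion limits are taken from the example metrics above, and the 70% confidence floor is an invented value for demonstration.

```python
from dataclasses import dataclass


@dataclass
class CircuitBreaker:
    """Latching breaker: once tripped, it stays tripped until replaced."""
    min_confidence: float = 0.70             # assumed confidence floor
    max_competitor_deviation: float = 0.15   # from the example metrics
    max_weekly_margin_erosion: float = 0.02  # from the example metrics
    tripped: bool = False
    reason: str = ""

    def evaluate(self, confidence, competitor_deviation, weekly_margin_erosion):
        """Check the monitored metrics; return True if the strategy may continue."""
        if self.tripped:
            return False
        if confidence < self.min_confidence:
            self._trip("model confidence below threshold")
        elif competitor_deviation > self.max_competitor_deviation:
            self._trip("competitor response outside historical pattern")
        elif weekly_margin_erosion > self.max_weekly_margin_erosion:
            self._trip("margin erosion above weekly limit")
        return not self.tripped

    def manual_override(self, who):
        """Human-initiated halt, available regardless of metric values."""
        self._trip(f"manual override by {who}")

    def _trip(self, reason):
        self.tripped = True
        self.reason = reason


breaker = CircuitBreaker()
first = breaker.evaluate(confidence=0.82, competitor_deviation=0.05, weekly_margin_erosion=0.01)
second = breaker.evaluate(confidence=0.82, competitor_deviation=0.20, weekly_margin_erosion=0.01)
third = breaker.evaluate(confidence=0.82, competitor_deviation=0.05, weekly_margin_erosion=0.01)
print(first, second, third, breaker.reason)
```

The breaker latches by design in this sketch: once a threshold is breached, healthy readings alone do not resume the strategy, so restarting it remains a deliberate human decision.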

IV. Engage the Fiduciary

The question before executive leadership is direct: Will you entrust strategic decision-making to systems that cannot explain their reasoning, cannot be constrained by your values, and cannot be stopped when they diverge from intended outcomes?

The era of “black box optimization” is incompatible with fiduciary responsibility. As AI becomes more deeply embedded in competitive strategy, the obligation to demand transparency, enforce accountability, and maintain human sovereignty over strategic direction becomes non-negotiable.

Elevion’s Human-in-the-Loop architecture represents a categorical departure from prevailing practice—not because it limits AI capability, but because it structures that capability within a framework of engineered trust. We challenge you to hold every strategic partner, every predictive platform, and every autonomous system to this standard.

Governance is not the cost of AI adoption. It is the condition of AI trust.